Why Accessibility is the Only Constant in a Fragmented World

By Jerlyn O'Donnell, CPACC

Back in the early 2010s, the design world was paralyzed by a specific anxiety: screen resolution. The iPhone had set a 320-pixel-wide standard, but the real world was messy with BlackBerrys, Nokias, and burgeoning Android devices.

In an article I wrote at the time, I urged designers to stop obsessing over the dominant device and start thinking about the human context. I wrote:

Consider this: I use a GPS watch almost daily... I've often asked myself: Why isn't there content on it to influence our buying habits? Sure, it's a tiny screen, but as a designer, I can't help but imagine how I could manipulate its near stamp-sized dimensions to reach a completely new, very targeted audience.

At the time, I didn't have language for what I was describing. "Internet of Things" wasn't yet common terminology. But I recognized that connected devices would proliferate, each with distinct constraints and contexts. The runner's watch was just the beginning.

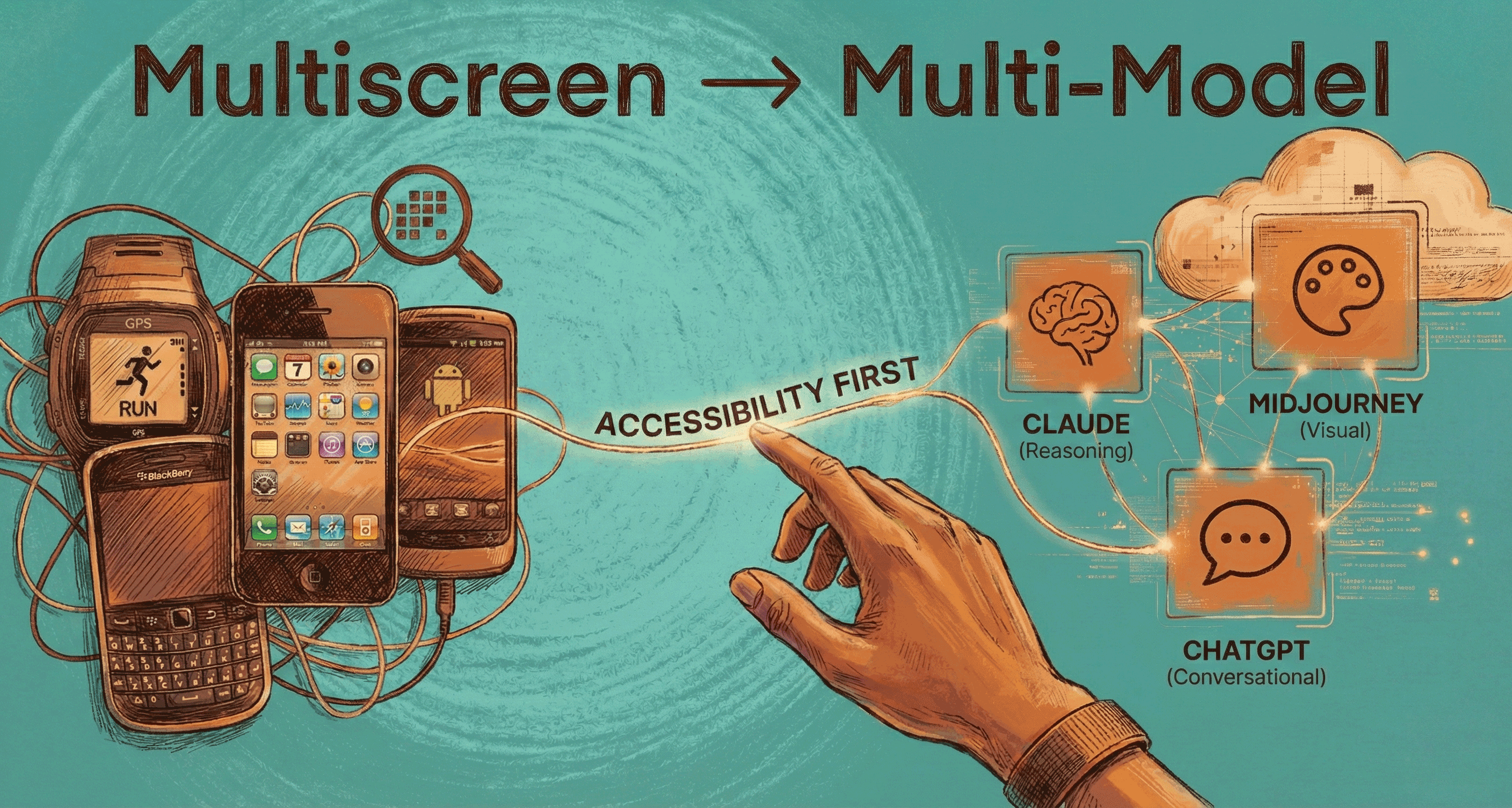

Today, through my #genAI365 project, I see history rhyming. The "Multiscreen" anxiety of yesterday has evolved into the "Multi-Model" reality of today.

The New Fragmentation: From Pixels to Parameters

Just as we used to juggle screen sizes, we now juggle intelligences. We are entering a phase of intense model fragmentation.

In my daily immersion in generative AI, I rarely rely on a single platform. I might use Claude for deep analytical work or coding assistance because of its long context window. I'll shift to Firefly for high-fidelity visual conceptualization, and I'll turn to Gemini for rapid iteration or data synthesis.

We used to ask, "Does this look right on an Android?" Now we must ask, "Does this output make sense across different cognitive models?"

If we design an experience that only works if the user has a paid subscription to the latest, greatest GPT model, we are making the exact same mistake we made in 2010 by designing only for the newest iPhone. We are confusing the tool with the user's goal.

When I build apps for analysis, I ensure they work whether the user queries Claude, ChatGPT, or local models. The semantic structure, not the AI provider, determines success. A focus path tracker that only works with GPT-4's output is as broken as a website that only renders on Safari.
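To make "the semantic structure, not the AI provider, determines success" concrete, here is a minimal Python sketch of one way to keep an analysis app model-agnostic. Every provider sits behind the same small interface. The app validates the model's reply against a provider-neutral JSON contract, and the app (not the model) renders the semantic HTML. The `ModelClient` interface, the field names, and the two fake clients are hypothetical stand-ins, not any vendor's SDK. A real adapter would call the provider's own API inside `complete`.

```python
import html
import json
from typing import Protocol


class ModelClient(Protocol):
    """Hypothetical interface: any provider, hosted or local, that maps a prompt to text."""

    def complete(self, prompt: str) -> str: ...


# Provider-neutral contract: field name -> expected Python type.
REQUIRED_FIELDS = {"title": str, "summary": str, "findings": list}


def parse_analysis(raw: str) -> dict:
    """Pull the JSON object out of raw model text and validate it against the contract."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object in model output")
    data = json.loads(raw[start : end + 1])
    for field, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected):
            raise ValueError(f"missing or invalid field: {field}")
    if not all(isinstance(item, str) for item in data["findings"]):
        raise ValueError("findings must be a list of strings")
    return data


def to_html(data: dict) -> str:
    """The app, not the model, owns the semantic markup (heading, paragraph, list)."""
    items = "".join(f"<li>{html.escape(item)}</li>" for item in data["findings"])
    return (
        f"<article><h2>{html.escape(data['title'])}</h2>"
        f"<p>{html.escape(data['summary'])}</p><ul>{items}</ul></article>"
    )


def run_analysis(client: ModelClient, question: str) -> str:
    """Same prompt, same validation, same markup, whichever client is passed in."""
    prompt = (
        "Reply with a JSON object with keys title (string), summary (string), "
        f"and findings (list of strings). Question: {question}"
    )
    return to_html(parse_analysis(client.complete(prompt)))


# Fake clients standing in for real SDK calls (hypothetical, for illustration only).
REPLY = {"title": "Q3 focus", "summary": "Sessions grew.", "findings": ["Mornings peak"]}


class FakeHostedModel:
    def complete(self, prompt: str) -> str:
        return json.dumps(REPLY)


class FakeLocalModel:
    def complete(self, prompt: str) -> str:
        # Some models wrap JSON in chatty prose; parse_analysis tolerates that.
        return "Sure! Here you go: " + json.dumps(REPLY)


hosted = run_analysis(FakeHostedModel(), "How did focus sessions change?")
local = run_analysis(FakeLocalModel(), "How did focus sessions change?")
print(hosted == local)  # True
```

Because both fakes satisfy the same contract, `run_analysis` returns identical markup for either one. Swapping in a different model changes only the adapter, never the headings and lists a screen reader depends on.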

Accessibility First is the Unchanging North Star

The thread connecting my early work on multiscreen environments to my current work with AI is my certification as a Certified Professional in Accessibility Core Competencies (CPACC).

In the multiscreen era, "accessibility" meant ensuring your content was legible on a 240-pixel-wide screen so you didn't alienate a demographic that couldn't afford an iPhone. It was about economic and technical inclusion.

In the multi-model AI era, the stakes for accessibility are far higher.

If an AI model is trained on biased data, it produces exclusionary outputs. Research by Buolamwini and Gebru found that commercial gender classification systems had error rates as high as 34.7% for darker-skinned women, compared with at most 0.8% for lighter-skinned men (source: "Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification," 2018). More recent studies of image generation models like DALL-E and Midjourney show persistent bias in how professionals are depicted, reinforcing harmful stereotypes about race and gender (source: Stanford HAI, "Auditing AI Image Generators," 2023). These aren't edge cases. They're systematic failures that erase entire cultures and perpetuate exclusion.
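The method behind audits like Gender Shades is disaggregation. Rather than reporting one overall accuracy figure, you compute error rates separately for each intersectional subgroup and compare them. Here is a minimal sketch, assuming you already have labeled evaluation results. The `Record` type and the toy records are hypothetical illustrations, not data from any study.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Record(NamedTuple):
    """One labeled evaluation result (hypothetical schema)."""

    skin_type: str  # e.g. "darker" or "lighter"
    gender: str     # e.g. "female" or "male"
    correct: bool   # did the prediction match the ground-truth label?


Subgroup = tuple[str, str]


def error_rates_by_subgroup(records: Iterable[Record]) -> dict[Subgroup, float]:
    """Error rate for each intersectional (skin_type, gender) subgroup."""
    totals: dict[Subgroup, int] = defaultdict(int)
    errors: dict[Subgroup, int] = defaultdict(int)
    for r in records:
        key = (r.skin_type, r.gender)
        totals[key] += 1
        if not r.correct:
            errors[key] += 1
    return {key: errors[key] / totals[key] for key in totals}


def worst_gap_points(rates: dict[Subgroup, float]) -> float:
    """Gap, in percentage points, between the worst- and best-served subgroups."""
    return 100 * (max(rates.values()) - min(rates.values()))


# Toy records, invented for illustration only.
toy = (
    [Record("darker", "female", False)] * 3
    + [Record("darker", "female", True)] * 7
    + [Record("lighter", "male", False)] * 1
    + [Record("lighter", "male", True)] * 9
)
rates = error_rates_by_subgroup(toy)
print(rates)  # {('darker', 'female'): 0.3, ('lighter', 'male'): 0.1}
print(round(worst_gap_points(rates), 1))  # 20.0
```

The toy gap is reported in percentage points. A 30% versus 10% error rate is a 20-point gap, which is also three times the error rate. Keeping absolute and relative framings distinct matters whenever audit results are quoted.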

If accessing AI requires mastering "prompt engineering" syntax, we've created a new literacy barrier that locks out non-technical users entirely. This is the digital divide, version 3.0.

As an Experience Design Director with accessibility certification, my stance is clear: If it isn't accessible, it isn't finished.

Accessibility isn't a compliance checkbox at the end of a project; it is the foundational constraint of good design. Whether we are projecting an interface onto a wearable screen or generating code via an LLM, we must ask: Who is this excluding? Are the outputs semantic and screen-reader friendly? Is the training data representative of the multitudes we serve?

Model-agnostic design means structuring outputs semantically (proper HTML hierarchies, alt text, ARIA labels) so they remain accessible regardless of which AI generated them. In my consulting practice (Design Lady LLC), I train custom LoRA models for clients and verify that their outputs meet accessibility standards whatever the base model. The semantic structure persists even as the generation mechanism evolves.
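One way to keep generated output accessible no matter which model produced it is to run every fragment through the same automated check before it ships. Below is a minimal sketch using Python's standard-library `html.parser`. It covers only three common failures: images with no `alt` attribute, skipped heading levels, and buttons with no accessible name. It is an illustration, not a substitute for a full WCAG audit with a dedicated tool and screen-reader testing. The `A11yLint` class and `lint_html` function are hypothetical names.

```python
from html.parser import HTMLParser


class A11yLint(HTMLParser):
    """Flags a few common semantic problems in generated HTML; not a full WCAG audit."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[str] = []
        self.last_heading = 0      # level of the most recent heading, 0 if none yet
        self.in_button = False
        self.button_named = False  # has aria-label or aria-labelledby
        self.button_text = ""      # visible text (plus img alt) inside the button

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "img":
            if "alt" not in attr:
                self.issues.append(f"img missing alt: {attr.get('src', '?')}")
            elif self.in_button:
                # An image's alt text contributes to its parent button's accessible name.
                self.button_text += attr["alt"] or ""
        elif tag in self.HEADINGS:
            level = int(tag[1])
            if self.last_heading and level > self.last_heading + 1:
                self.issues.append(f"heading jumps from h{self.last_heading} to h{level}")
            self.last_heading = level
        elif tag == "button":
            self.in_button = True
            self.button_named = bool(attr.get("aria-label") or attr.get("aria-labelledby"))
            self.button_text = ""

    def handle_data(self, data):
        if self.in_button:
            self.button_text += data

    def handle_endtag(self, tag):
        if tag == "button" and self.in_button:
            if not (self.button_named or self.button_text.strip()):
                self.issues.append("button has no accessible name")
            self.in_button = False


def lint_html(markup: str) -> list[str]:
    """Run the checks over one HTML fragment and return human-readable issues."""
    linter = A11yLint()
    linter.feed(markup)
    linter.close()
    return linter.issues


generated = '<h1>Report</h1><h3>Detail</h3><img src="chart.png"><button></button>'
for issue in lint_html(generated):
    print(issue)  # the h1-to-h3 jump, the missing alt, and the unnamed button
```

An empty `alt=""` is deliberately accepted, because that is the correct markup for a decorative image. Only a missing attribute is flagged.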

The Return on Inclusion

My argument years ago was that "thinking multiscreen" was a win-win for ROI because it reached previously unreachable consumers.

The same holds true for Multi-Model, Accessibility-First thinking. By designing systems that are model-agnostic and inherently inclusive, we future-proof our work against the rapid churn of technology.

Technology will always fragment. Screens will change sizes; AI models will change capabilities. The only sustainable strategy is to anchor our work in the unchangeable reality of human diversity.